Ben Pettis

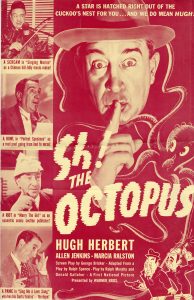

From the 1910s through the 1980s, Hollywood studios promoted their movies through the creation and dissemination of pressbooks—bound pamphlets containing publicity materials, advertising layouts, accessories for sale, and other promotional tactics. These promotional booklets were sent to exhibitors and press outlets, making them vital nodes within the wider networks of film circulation and culture.

One of the WCFTR’s most prominent collections contains thousands of pressbooks, many of which have been digitized and are freely available online. The Media History Digital Library has pressbooks from over 20 major and minor studios, ranging from throughout the 20th century. We are grateful to Matthew and Natalie Bernstein, Kelly and Kimberly Kahl, and Stephen P. Jarchow for their support of this multi-year digitization initiative.

One of our current projects is examining how these publicity materials were used in newspapers across the United States. This project really has it all: extensive digital collections, traditional archival research, and sophisticated computational workflows—including a custom machine learning model to identify and separate individual articles, images, and other components from each pressbook page.

Wait, machine learning? Really?

Yes! But that’s not to say that the WCFTR team hasn’t still been incredibly busy. The use of computational methods doesn’t erase the work of archival research. It just changes what it looks like.

Earlier work found that this smaller-scale segmentation would be necessary to make meaningful comparisons; comparing entire pressbook pages against entire newspaper pages, though less computationally demanding, yielded limited results. Separating the individual articles from each pressbook will enable more detailed comparisons. Our plan is to use AI to detect separate elements from each pressbook page, which would then be compared against articles from newspapers in the Library of Congress’ Chronicling America collection. Before we can apply machine learning to the pressbooks, though, we had to “teach” the computer how to make sense of a pressbook. For several weeks, a team of incredible Comm Arts graduate students (Lore FitzWhittemore, Areyana Proctor, and Olivia Riley) has been carefully reviewing scanned pressbooks and annotating thousands of pages to train a computer vision model.

On paper, this data annotation process is straightforward. The team put together a sample of pressbooks–nearly 4,000 individual pages! Lore, Areyana, and Olivia then reviewed each page and used an online tool to draw boundaries for each object on the page while also categorizing each object (e.g. as an article, image, headline, etc.).

In practice, the data annotation is an incredibly time-consuming and demanding task. Even working at a steady pace, the total process took nearly two months to complete. And while the work is repetitive and tedious, the annotators all recognized how simple decisions – such as whether text like “Turn to the next page for an exciting layout…” should be categorized as an caption or a headline – could have important implications for how the later computer vision model would work. As Lore puts it, “data annotation is not a passive process; the data annotator is constantly making microdecisions about how content should be understood and classified.”

“It really was surprising how infinite a set of micro-decisions appeared as we dug into the work.”

Working with a team of UW-Madison graduate students with working familiarity with the pressbooks was advantageous for ensuring that all three annotators were assessing the pressbook scans similarly. “Getting us all in the same room—material or virtual—was the best way to make sure we all labeled this diverse set of objects consistently,” said Olivia. “It really was surprising how infinite a set of micro-decisions appeared as we dug into the work.”

After the data annotation process was completed, it was Sam Hansen‘s turn to work with the data. They wrote code to process the annotated page scans using the YOLO (You Only Look Once) object detection algorithm. The YOLO algorithm uses a neural network to efficiently “look” at an image and detect separate objects. With adequate training data, these models can accurately classify these objects as well. For the pressbooks project, we hope to use this approach so that a computer can “read” the pressbook page and automatically detect the separate articles and elements from each page. By using the Center for High Throughput Computing’s resources, we will be able to process thousands of pressbooks in a matter of hours—rather than the months and years that it might take to conduct such work entirely by hand. This work is currently ongoing, and we look forward to sharing more in the coming weeks.

High-throughput computing infrastructure and sophisticated machine vision workflows enable us to ask questions about Hollywood pressbooks, including who used them, how, and whether the publicity text, promotional photos, and ads from the pressbooks permeated American newspapers and magazines as intended. Computational methods can intake and parse copious amounts of information, identify large-scale patterns that would be difficult if not impossible to recognize without such a significant scale of data.

But simultaneously, as our annotators became acutely aware of, it is nevertheless an incredibly zoomed-out view of the source material. As Olivia put it, “The super-fast, surface-level work of annotation relies on a certain amount of gut instinct which is often precisely the hegemonic habit critical scholars of identity seek to combat in their analysis.” As the project continues to unfold, we will continue to explore new technologies such as machine learning and computer vision while carefully assessing how to incorporate them alongside traditional methods of archival research.

Keep an eye on the WCFTR blog as we share more project updates. Please be sure to also follow along via our social media or subscribe to our newsletter to keep up with all of the WCFTR’s news and events!

- Newsletter: Subscribe

- Mastodon (Fediverse): @wcftr@hcommons.social

- Facebook: facebook.com/wicenterforfilmandtheaterresearch

- Instagram: @wcftr_archive